Orazio Gallo

I am interested in a variety of topics, mostly related to computer vision and machine learning, though so far I have been able to work on only a small subset of them. This page provides a brief description of my published projects, from most to least recent.

I first became fascinated by the human visual system, and specifically by attention and eye movements: in 2003 I worked on my Master's thesis in Prof. Lawrence W. Stark's lab at the University of California, Berkeley. My research focused on combining image processing algorithms to predict eye fixations on images (more).

In 2004 I joined Dr. Joel M. Miller's lab at the Smith-Kettlewell Eye Research Institute (I am also the proud holder of the grappa bottle in the small picture in the left menu bar of the lab website :) ).

I worked on the image processing and computer vision aspects of Dr. Miller's Gold Bead Tissue Markers (GBTM) imaging method, a novel technique that can detect micrometric motion within tissue (more).

I also participated in the improvement of ocular torsion detection and measurement (more).

In 2006 I enrolled in the computer engineering Ph.D. program at the University of California, Santa Cruz. My adviser here is Prof. R. Manduchi.

My current research focuses on:

Computer vision algorithms for embedded devices.

Computational photography.

The HPU

J. Davis, J. Arderiu, H. Lin, Z. Nevins, S. Schuon, O. Gallo, and M. Yang, CVPR 2010.

pdf

Computer-mediated, human micro-labor markets have so far been treated as novelty services good for cheaply labeling training data and easy user studies. This paper claims that these markets can be characterized as Human co-Processing Units (HPU), and represent a first-class computational platform. In the same way that Graphics Processing Units (GPU) represent a change in architecture from CPU-based computation, HPU-based computation is different and deserves careful characterization and study. We demonstrate the value of this claim by showing that simplistic HPU computation can be more accurate, as well as cheaper, than complex CPU-based algorithms on some important computer vision tasks.

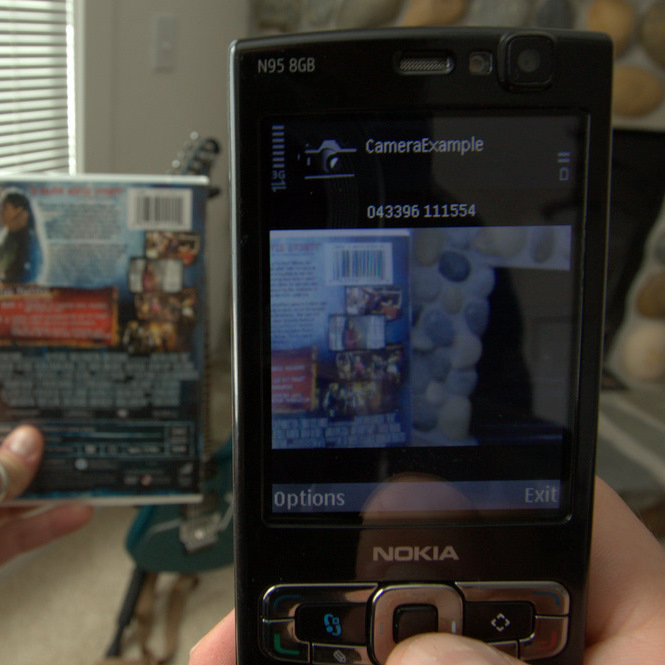

Reading Challenging Barcodes with Cameras

O. Gallo and R. Manduchi, WACV 2009.

pdf bibtex project page

Current camera-based barcode readers do not work well when the image has low resolution, is out of focus, or is motion-blurred. One main reason is that virtually all existing algorithms perform some sort of binarization, either by gray scale thresholding or by finding the bar edges. We propose a new approach to barcode reading that never needs to binarize the image. Instead, we use deformable barcode digit models in a maximum likelihood setting. We show that the particular nature of these models enables efficient integration over the space of deformations. Global optimization over all digits is then performed using dynamic programming.

Artifact-free High Dynamic Range Imaging

O. Gallo, N. Gelfand, W. Chen, M. Tico, K. Pulli, ICCP 2009.

pdf (14MB) reduced pdf (<1MB) bibtex project page

High Dynamic Range (HDR) images can be generated by taking multiple exposures of the same scene. When fusing information from different images, however, the slightest change in the scene can generate artifacts which dramatically limit the potential of this solution. We present a technique capable of dealing with a large amount of movement in the scene: we find, in all the available exposures, patches consistent with a reference image previously selected from the stack. We generate the HDR image by averaging the radiance estimates of all such regions and we compensate for camera calibration errors by removing potential seams.
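The averaging step described above can be illustrated with a minimal sketch. It is not the method from the paper: it assumes a linear sensor response (radiance is roughly pixel value divided by exposure time) and uses a simple relative tolerance as a stand-in for the consistency test. The function name `merge_patch` and the `tolerance` parameter are hypothetical.

```python
def merge_patch(values, exposure_times, ref_index, tolerance=0.1):
    """Average the radiance estimates of one patch across exposures.

    Assumptions (not from the paper): linear sensor response, and a patch
    counts as consistent when its radiance estimate is within a relative
    `tolerance` of the estimate from the reference exposure.
    """
    # Per-exposure radiance estimate under the linear-response assumption.
    radiances = [v / t for v, t in zip(values, exposure_times)]
    ref = radiances[ref_index]
    # Keep only exposures that agree with the reference; the reference
    # itself always passes, so the list is never empty.
    consistent = [r for r in radiances if abs(r - ref) <= tolerance * ref]
    return sum(consistent) / len(consistent)


# Three exposures of the same patch; something moved in the third one.
merged = merge_patch([10.0, 20.0, 80.0], [1.0, 2.0, 4.0], ref_index=0)
```

Here the third exposure yields a radiance estimate twice as large as the reference, so it is left out of the average instead of producing a ghosting artifact.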

A camera-based pointing interface for mobile devices

O. Gallo, S. Arteaga, J. E. Davis, ICIP 2008.

pdf bibtex project page

As the applications delivered by cellular phones become increasingly sophisticated, the importance of choosing an input strategy is also growing. Touch-screens can greatly simplify navigation, but the vast majority of phones on the market are not equipped with them. Cameras, on the other hand, are widespread even amongst low-end phones: in this paper we propose a vision-based pointing system that allows the user to control the pointer's position by just waving a hand, with no need for additional hardware.

Robust curb and ramp detection for safe parking using the Canesta TOF camera

O. Gallo, R. Manduchi, A. Rafii, CVPR 2008.

pdf bibtex project page

In this paper we present a study concerning the use of the Canesta TOF camera for recognition of curbs and ramps. Our approach is based on the detection of individual planar patches using CC-RANSAC, a modified version of the classic RANSAC robust regression algorithm. Whereas RANSAC uses the whole set of inliers to evaluate the fitness of a candidate plane, CC-RANSAC only considers the largest connected components of inliers. We provide experimental evidence that CC-RANSAC provides a more accurate estimation of the dominant plane than RANSAC with a smaller number of iterations.
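The difference between the two fitness measures can be sketched as follows. This is only an illustration under stated assumptions: inliers are laid out on the pixel grid of the range image, connectivity is 4-neighbor, and only the single largest component is scored. The function names and the toy residuals are hypothetical and are not taken from the paper.

```python
from collections import deque


def inlier_mask(residuals, threshold):
    """Mark pixels whose distance to the candidate plane is below threshold."""
    return [[abs(r) < threshold for r in row] for row in residuals]


def ransac_score(mask):
    """Classic RANSAC fitness: count every inlier, wherever it lies."""
    return sum(sum(row) for row in mask)


def cc_ransac_score(mask):
    """CC-RANSAC-style fitness: size of the largest 4-connected inlier region."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    best = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill of one connected component.
            size = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            best = max(best, size)
    return best


# Toy point-to-plane distances for a 3x4 range image (made-up values).
residuals = [
    [0.0, 0.0, 5.0, 0.0],
    [0.0, 0.0, 5.0, 5.0],
    [5.0, 5.0, 5.0, 0.0],
]
mask = inlier_mask(residuals, threshold=1.0)
print(ransac_score(mask), cc_ransac_score(mask))
```

In this toy case the two isolated inliers on the right raise the classic score but not the connected-component score, which is the kind of spurious support that scoring by connected components is meant to discount.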

Stability of gold bead tissue markers

J.M. Miller, E.A. Rossi, M. Wiesmair, D.E. Alexander, and O. Gallo, Journal of Vision, 2006.

link

Significant soft tissue features, in particular in the orbit, may not be resolved by MRI or any other imaging method. Spatial resolution may not be the only limitation: existing techniques cannot provide information about shears or deformations within tissues. We describe a new method that uses micrometric gold beads as markers to visualize movements of such tissues with high spatial resolution (~100 µm) and moderate temporal resolution (~100 ms).

3-D video oculography in monkeys

L. Ai, O. Gallo, D.E. Alexander, J.M. Miller, ARVO 2006.

Eye tracking is crucial in many research areas. While there are a number of techniques that can reliably detect horizontal and vertical position, ocular torsion is still difficult to determine. Eye coils may represent a solution to this problem when avoiding implantation in the orbit is not crucial. Video oculography (VOG) can exploit the presence of iris crypts to estimate torsion but is unreliable when the iris texture is too smooth, as is the case in primates. We propose to use VOG in combination with scleral markers of different shapes and locations to improve robustness.

Combining conspicuity maps for hROIs prediction

C.M. Privitera, O. Gallo, G. Grimoldi, T. Fujita, and L.W. Stark, ECCV 2004.

pdf

When looking at an image, we alternate rapid eye movements (saccades) and fixations; the resulting sequence is referred to as a scanpath and is crucial for us to perceive a much larger region of the scene than the area that projects onto the fovea. In this paper we show that scanpaths exhibit a degree of predictability that can be exploited for purposes such as smart image compression. We also show that it is possible, for a given class of images, to define an optimal combination of image processing algorithms to improve the prediction of fixation loci.

last updated 04-26-2010