
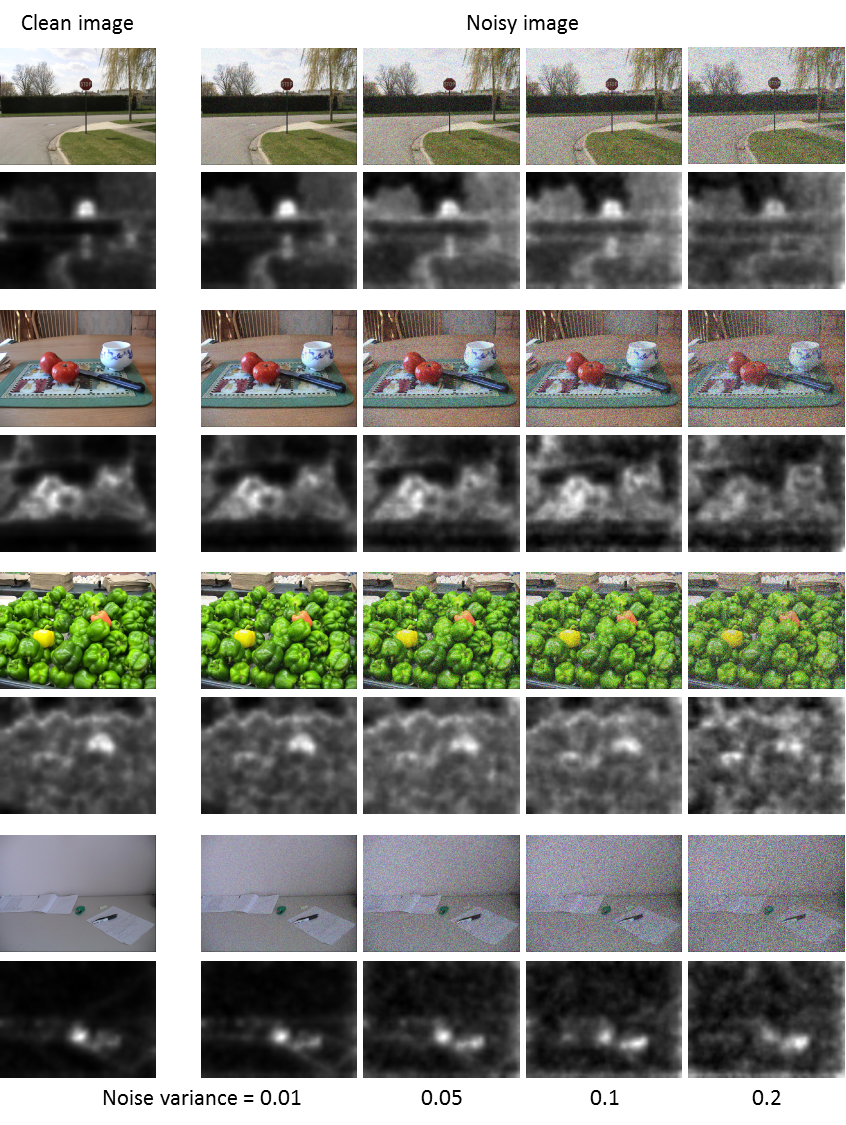

The human visual system possesses the remarkable ability to pick out salient objects in images. Even more impressive is its ability to do the very same in the presence of disturbances. In particular, the ability persists despite the presence

of noise, poor weather, and other impediments to perfect vision. Meanwhile, noise can significantly degrade the accuracy of automated computational saliency detection algorithms. In this paper we set out to remedy this shortcoming. Existing

computational saliency models generally assume that the given image is clean and a fundamental and explicit treatment of saliency in noisy images is missing from the literature. Here we propose a novel and statistically sound method for

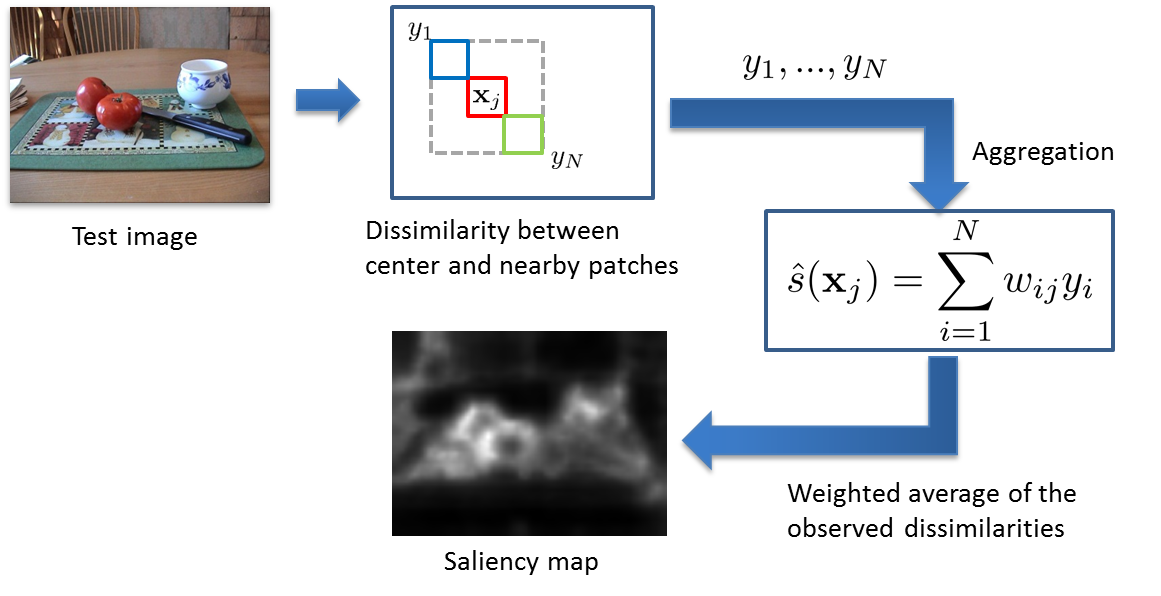

estimating saliency based on a non-parametric regression framework, and investigate the stability of saliency models for noisy images and analyze how state-of-the-art computational models respond to noisy visual stimuli. The proposed model

of saliency at a pixel of interest is a data-dependent weighted average of dissimilarities between a center patch around that pixel and other patches. In order to further enhance the degree of accuracy in predicting the human fixations and of stability

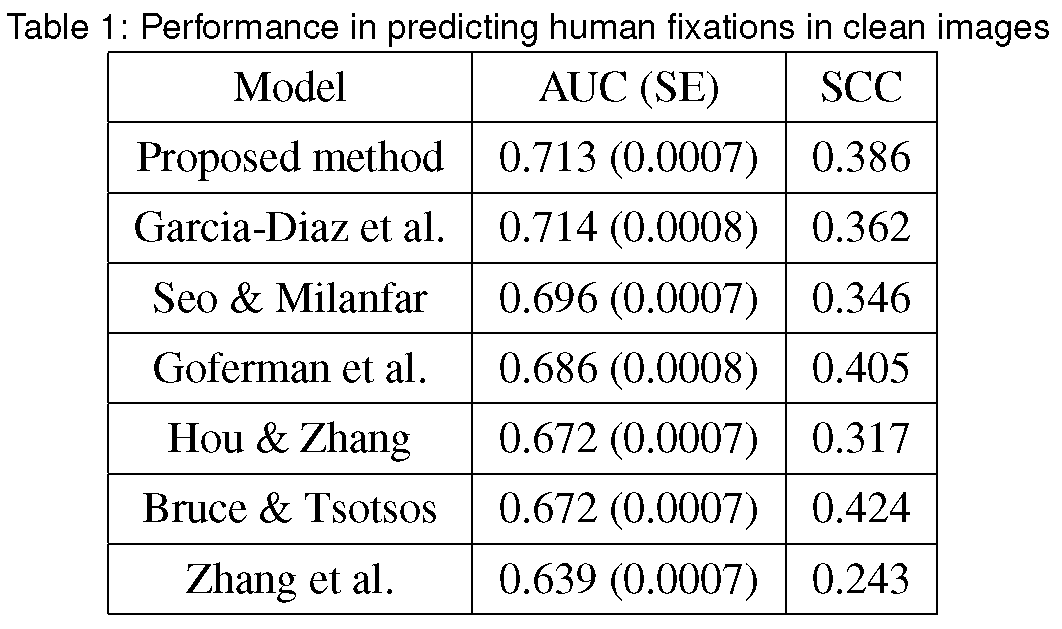

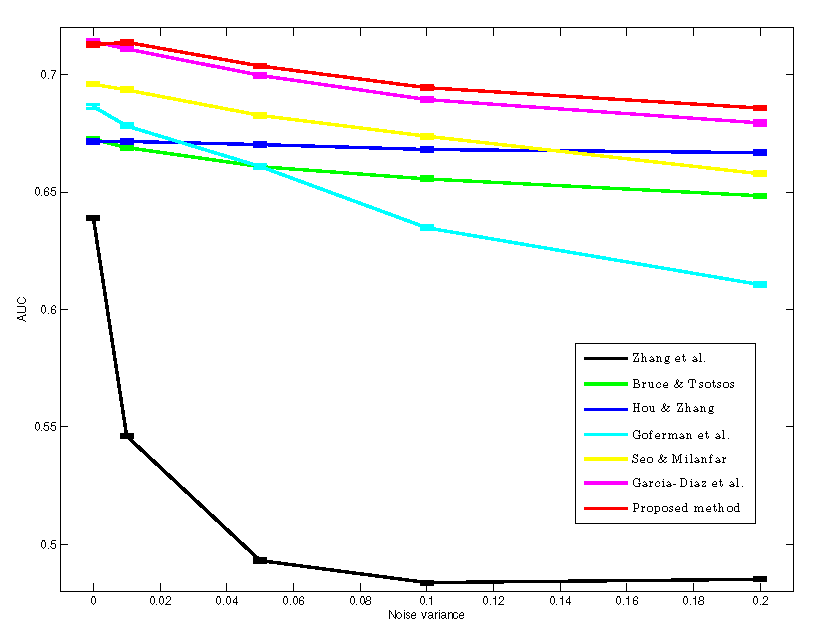

to noise, we incorporate a global and multi-scale approach by extending the local analysis window to the entire input image, even further to multiple scaled copies of the image. Our method consistently outperforms six other state-of-the-art models

for both noise-free and noisy cases.
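The patch-based definition above (saliency as a data-dependent weighted average of dissimilarities between a center patch and other patches) can be illustrated with a minimal Python sketch. This is not the code from the Matlab toolbox, and it does not reproduce the features or kernels used in the paper. It assumes, purely for illustration, a grayscale image with intensities in [0, 1], the mean squared intensity difference as the patch dissimilarity, and a Gaussian kernel on that same distance as the data-dependent weight. The names `saliency_map`, `patch_radius`, `window_radius`, and `bandwidth` are hypothetical.

```python
import math


def extract_patch(img, r, c, radius):
    """Return the square patch centred at (r, c) as a flat list.

    Coordinates outside the image are clamped to the nearest border pixel.
    """
    h, w = len(img), len(img[0])
    return [
        img[min(max(r + dr, 0), h - 1)][min(max(c + dc, 0), w - 1)]
        for dr in range(-radius, radius + 1)
        for dc in range(-radius, radius + 1)
    ]


def dissimilarity(p, q):
    """Mean squared difference between two patches (assumed measure)."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) / len(p)


def saliency_map(img, patch_radius=1, window_radius=None, bandwidth=0.5):
    """Weighted average of patch dissimilarities at every pixel.

    window_radius=None compares each center patch with every other patch
    in the image (a global window); an integer restricts the comparison
    to a local square window of that radius.
    """
    h, w = len(img), len(img[0])
    patches = [
        [extract_patch(img, r, c, patch_radius) for c in range(w)]
        for r in range(h)
    ]
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            num = 0.0
            den = 0.0
            for rr in range(h):
                for cc in range(w):
                    if (rr, cc) == (r, c):
                        continue  # skip the center patch itself
                    if (window_radius is not None
                            and max(abs(rr - r), abs(cc - c)) > window_radius):
                        continue  # outside the local analysis window
                    d = dissimilarity(patches[r][c], patches[rr][cc])
                    # Assumed data-dependent weight: Gaussian kernel on d.
                    weight = math.exp(-d / bandwidth ** 2)
                    num += weight * d
                    den += weight
            out[r][c] = num / den if den > 0 else 0.0
    return out


if __name__ == "__main__":
    # Toy input: a flat dark image with a single bright pixel.
    image = [[0.0] * 7 for _ in range(7)]
    image[3][3] = 1.0
    s = saliency_map(image)
    print(s[3][3] > s[0][0])  # the bright spot scores above the background
```

On this toy input, the pixels whose patches contain the bright spot receive a higher score than the flat background, and a perfectly uniform image yields zero saliency everywhere. The multi-scale extension mentioned above could be approximated by running the same function on rescaled copies of the image and combining the resulting maps, but that step is not shown here.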

Overview

Experimental Results

Matlab Toolbox (New release)

See more details and examples in the following papers.


The package contains the software that can be used to compute the visual saliency map, as explained in the JoV paper above.

The included demonstration files (demo*.m) provide the easiest way to learn how to use the code.

Disclaimer: This is experimental software. It is provided for non-commercial research purposes only. Use at your own risk. No warranty is implied by this distribution. Copyright © 2010 by University of California.

SaliencyCode.zip

File updated: Mar 13 2013
